Interpretable Multi-Hop Reasoning on Knowledge Graphs

Project Overview

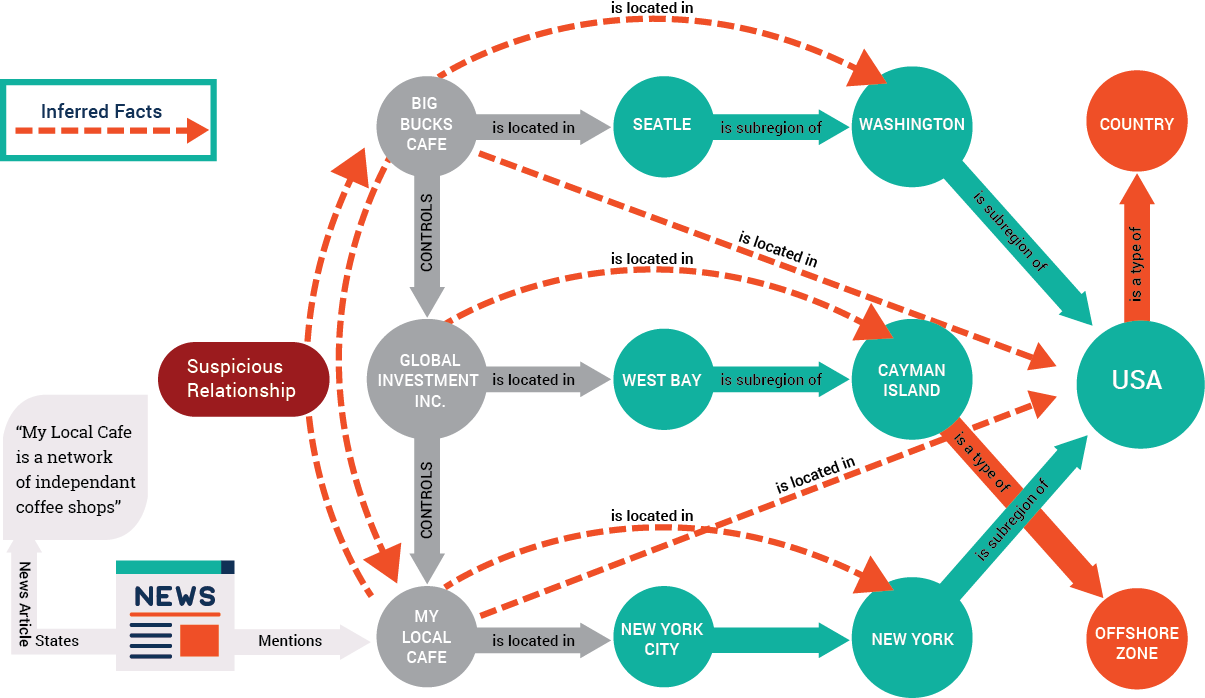

We aim to enable interpretable multi-hop reasoning on knowledge graphs with a novel reinforcement learning (RL) model. The work has two parts: designing a robust policy and reward signal, and explaining the model's decisions in an interpretable way. For the first part, we explore probabilistic models for the policy and design a reward signal that balances exploration and exploitation. For the second, we represent the reasoning process transparently and generate human-readable counterfactual explanations.
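
The overview does not specify how exploration and exploitation are balanced. One common pattern, shown here only as a hypothetical sketch, adds a count-based novelty bonus to the sparse "reached the answer" reward and decays the bonus weight over training so the agent shifts from exploring to exploiting. The class name `ShapedReward` and all constants are assumptions, not this project's design.

```python
import math
from collections import Counter


class ShapedReward:
    """Hypothetical reward: 1.0 for reaching the target entity, plus a
    count-based exploration bonus whose weight decays linearly over training."""

    def __init__(self, beta_start=0.5, beta_end=0.0, decay_steps=1000):
        self.beta_start = beta_start
        self.beta_end = beta_end
        self.decay_steps = decay_steps
        self.visits = Counter()  # how often each entity has been visited

    def beta(self, step):
        # Linear schedule from beta_start to beta_end, then held constant.
        frac = min(step / self.decay_steps, 1.0)
        return self.beta_start + frac * (self.beta_end - self.beta_start)

    def __call__(self, entity, target, step):
        self.visits[entity] += 1
        extrinsic = 1.0 if entity == target else 0.0  # exploitation signal
        bonus = 1.0 / math.sqrt(self.visits[entity])  # shrinks on revisits
        return extrinsic + self.beta(step) * bonus
```

Early in training a rarely visited entity earns a noticeable bonus; once `step` reaches `decay_steps`, only the extrinsic reward remains.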

Tools

We are using the following tools and frameworks in this project.

Datasets

We are working on the following benchmark datasets.

Methods Used

We are utilizing the following machine learning techniques in this project.

- Reinforcement Learning

- Graph Convolutional Networks (GCN)

- LSTM + Attention Networks
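
How the GCN is wired into this project's policy is not described here, so the following is only a dependency-free sketch of one standard GCN layer (the Kipf and Welling propagation rule), H' = ReLU(D^-1/2 (A + I) D^-1/2 H W). The function name `gcn_layer` and the list-of-lists matrix format are illustrative assumptions.

```python
import math


def gcn_layer(adj, features, weight):
    """One GCN layer on dense lists: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adj: n x n adjacency matrix, features: n x f, weight: f x o.
    """
    n = len(adj)
    # Add self-loops so each node keeps part of its own representation.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric degree normalisation.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    f = len(features[0])
    # Aggregate neighbour features: norm @ features.
    agg = [[sum(norm[i][k] * features[k][c] for k in range(n)) for c in range(f)] for i in range(n)]
    # Linear transform, then ReLU: relu(agg @ weight).
    o = len(weight[0])
    return [[max(0.0, sum(agg[i][c] * weight[c][j] for c in range(f))) for j in range(o)]
            for i in range(n)]
```

For two connected nodes with features `[[1.0], [0.0]]` and weight `[[1.0]]`, both nodes end up with `[0.5]`: the feature is averaged across the edge.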

Related Works

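DeepPath is a reinforcement learning method for multi-hop reasoning on knowledge graphs. It learns to find relational paths between entity pairs without relying on hand-engineered features or rules. Its state is built from TransE entity embeddings, and a policy network chooses which relation to follow at each step. The policy is first pretrained with supervision on paths found by breadth-first search and then refined with policy-gradient (REINFORCE) training, using a reward that combines path success, efficiency, and diversity. The learned paths then serve as features for link prediction and fact prediction.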
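
The core policy-gradient loop behind DeepPath-style path finding can be sketched on a toy graph. This is a hypothetical illustration, not DeepPath's code: a tabular softmax policy over each entity's outgoing edges stands in for DeepPath's neural policy over TransE states, the reward is a plain 0/1 success signal, and `GRAPH`, `rollout`, `train`, and `success_probability` are invented names.

```python
import math
import random

# Hypothetical toy knowledge graph: entity -> list of (relation, tail entity).
GRAPH = {
    "A": [("r1", "B"), ("r2", "C")],
    "B": [("r3", "D"), ("r4", "E")],
    "C": [("r5", "E")],
}


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def rollout(logits, start, target, max_hops, rng):
    """Sample one path; return the (entity, action index) steps and the final entity."""
    steps, entity = [], start
    for _ in range(max_hops):
        edges = GRAPH.get(entity, [])
        if entity == target or not edges:
            break  # answer reached, or dead end with no outgoing edges
        action = rng.choices(range(len(edges)), weights=softmax(logits[entity]))[0]
        steps.append((entity, action))
        entity = edges[action][1]
    return steps, entity


def train(start, target, episodes=300, lr=0.5, max_hops=2, seed=0):
    """Vanilla REINFORCE (no baseline) with a sparse 0/1 terminal reward."""
    rng = random.Random(seed)
    logits = {e: [0.0] * len(edges) for e, edges in GRAPH.items()}  # uniform policy
    for _ in range(episodes):
        steps, end = rollout(logits, start, target, max_hops, rng)
        reward = 1.0 if end == target else 0.0
        # Gradient of log pi(a|s) w.r.t. the logits is onehot(a) - pi(.|s);
        # compute every gradient before applying any update.
        grads = [(e, a, softmax(logits[e])) for e, a in steps]
        for entity, action, probs in grads:
            for i, p in enumerate(probs):
                indicator = 1.0 if i == action else 0.0
                logits[entity][i] += lr * reward * (indicator - p)
    return logits


def success_probability(logits, start, target, max_hops=2):
    """Exact probability that the current policy reaches target within max_hops."""
    def walk(entity, hops):
        if entity == target:
            return 1.0
        edges = GRAPH.get(entity, [])
        if hops == 0 or not edges:
            return 0.0
        probs = softmax(logits[entity])
        return sum(p * walk(tail, hops - 1) for p, (_, tail) in zip(probs, edges))
    return walk(start, max_hops)
```

The uniform policy reaches `D` from `A` with probability 0.25; after `train("A", "D")` the policy concentrates on the path `A -r1-> B -r3-> D`.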

Never Give Up (NGU) is a reinforcement learning method that learns directed exploration strategies for hard-exploration environments with sparse rewards. It builds an intrinsic reward from two signals: an episodic novelty bonus, computed by comparing the current state embedding with the k nearest embeddings stored in an episodic memory, and a life-long novelty factor derived from Random Network Distillation. It also trains a family of policies that share one network but weight the intrinsic reward differently, ranging from strongly exploratory to purely exploitative behavior. NGU achieved strong results on hard-exploration Atari games such as Pitfall! and Montezuma's Revenge.
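
NGU's reward combination can be sketched as follows. This is a simplified illustration under stated assumptions: the running average of squared k-nearest-neighbor distances is replaced by a fixed constant `dm2`, the life-long factor `alpha` is passed in as a number instead of being computed from a Random Network Distillation error, and the constants are illustrative.

```python
import math


def episodic_novelty(embedding, memory, k=10, dm2=1.0, eps=1e-3, c=1e-3):
    """Simplified NGU-style episodic bonus from the k nearest stored embeddings.

    dm2 stands in for NGU's running average of squared k-NN distances;
    here it is a fixed, hypothetical constant.
    """
    if not memory:
        return 1.0 / c  # empty memory: the kernel sum is zero
    sq_dists = sorted(
        sum((a - b) ** 2 for a, b in zip(embedding, m)) for m in memory
    )[:k]
    # Inverse kernel: near-duplicates contribute about 1, distant states about 0.
    similarity = sum(eps / (d / dm2 + eps) for d in sq_dists)
    return 1.0 / (math.sqrt(similarity) + c)


def intrinsic_reward(episodic, alpha, max_scale=5.0):
    """Scale the episodic bonus by a life-long novelty factor clipped to [1, max_scale]."""
    return episodic * min(max(alpha, 1.0), max_scale)


def total_reward(extrinsic, intrinsic, beta=0.3):
    """Mix extrinsic and intrinsic rewards; NGU trains policies with several betas."""
    return extrinsic + beta * intrinsic
```

A state already stored in memory yields a small bonus, while a distant, unseen state yields a much larger one.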

KG-BART augments the BART encoder-decoder with a knowledge graph to improve generative commonsense reasoning, where the model must write a plausible sentence that uses a given set of concepts. It incorporates knowledge-graph relations between the input concepts and uses graph attention to aggregate concept semantics, which helps it produce more fluent and logically coherent sentences and generalize to unseen concept sets. KG-BART reported strong results on the CommonGen benchmark.
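
A knowledge-graph-augmented generator first needs the relations that connect its input concepts. The sketch below shows one simple way to gather them: keep triples between input concepts, optionally adding one-hop triples that touch a concept. The function `concept_subgraph` and the ConceptNet-style toy triples are hypothetical; KG-BART's actual graph construction is more involved.

```python
def concept_subgraph(concepts, triples, expand=False):
    """Select (head, relation, tail) triples relevant to a concept set.

    Always keeps triples whose head and tail are both input concepts; with
    expand=True, also keeps triples with exactly one end in the concept set.
    """
    concept_set = set(concepts)
    inner = [t for t in triples if t[0] in concept_set and t[2] in concept_set]
    if not expand:
        return inner
    # One-hop expansion: exactly one endpoint is an input concept.
    outer = [t for t in triples if (t[0] in concept_set) != (t[2] in concept_set)]
    return inner + outer


# Hypothetical ConceptNet-style triples for illustration only.
TRIPLES = [
    ("dog", "CapableOf", "run"),
    ("dog", "AtLocation", "park"),
    ("ball", "UsedFor", "play"),
    ("run", "RelatedTo", "exercise"),
]
```

With concepts `["dog", "run", "ball"]`, only `("dog", "CapableOf", "run")` links two input concepts; expansion adds the three triples that reach outside the set.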

The Probabilistic Entity Representation Model (PERM) reasons over knowledge graphs by representing each entity as a multivariate Gaussian density rather than a single point: the mean captures the entity's semantic position and the covariance captures its uncertainty, which helps it cope with missing or noisy data. Logical operators such as projection, intersection, and union act on these densities, so PERM can answer multi-hop logical queries, and it reported improvements over earlier query-embedding methods on such tasks.
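
One reason Gaussian representations suit logical queries is that some operations have closed forms. The sketch below intersects two diagonal Gaussians by taking their renormalized product (precisions add; the mean is precision-weighted) and scores a candidate entity by Mahalanobis distance. This is an illustrative assumption, not necessarily PERM's learned operators; `DiagGaussian`, `intersect`, and `mahalanobis` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class DiagGaussian:
    """Hypothetical diagonal-covariance Gaussian: per-dimension mean and variance."""
    mean: List[float]
    var: List[float]


def intersect(a: DiagGaussian, b: DiagGaussian) -> DiagGaussian:
    """Renormalised product of two diagonal Gaussians: precisions add and
    the new mean is the precision-weighted average of the two means."""
    var = [1.0 / (1.0 / va + 1.0 / vb) for va, vb in zip(a.var, b.var)]
    mean = [v * (ma / va + mb / vb)
            for v, ma, va, mb, vb in zip(var, a.mean, a.var, b.mean, b.var)]
    return DiagGaussian(mean, var)


def mahalanobis(point: List[float], g: DiagGaussian) -> float:
    """Distance of a point embedding from a Gaussian query; smaller is a better answer."""
    return math.sqrt(sum((x - m) ** 2 / v for x, m, v in zip(point, g.mean, g.var)))
```

Intersecting N(0, 1) with N(2, 1) gives N(1, 0.5): the answer region sits between both constraints and is tighter than either.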

Geometry Interaction Knowledge Graph Embeddings (GIE) embeds knowledge graphs by learning interactions among Euclidean, hyperbolic, and hyperspherical spaces, so that a single model can capture chain, hierarchical, and ring structures that no single geometry fits well. It models the interactions between entities and relations as geometric transformations in these spaces. The resulting embeddings have shown improved performance on several benchmarks for knowledge graph completion and link prediction.
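
The motivation for combining geometries is that distance behaves differently in each space. The sketch below computes the distance between two points in the Euclidean plane, in the Poincaré ball model of hyperbolic space, and on the sphere (as an angle). It illustrates the three geometries only and is not GIE's scoring function; the function names are hypothetical.

```python
import math


def euclidean_distance(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def poincare_distance(x, y):
    """Hyperbolic distance in the Poincare ball (points must have norm < 1):
    arcosh(1 + 2|x - y|^2 / ((1 - |x|^2)(1 - |y|^2)))."""
    nx = sum(a * a for a in x)
    ny = sum(b * b for b in y)
    if nx >= 1.0 or ny >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.acosh(1.0 + 2.0 * sq / ((1.0 - nx) * (1.0 - ny)))


def spherical_distance(x, y):
    """Great-circle (angular) distance between directions x and y."""
    dot = sum(a * b for a, b in zip(x, y))
    norms = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norms)))
```

Moving 0.5 from the origin costs 0.5 in Euclidean space but ln 3 (about 1.10) in the Poincaré ball, and distances grow rapidly near the boundary, which is why hyperbolic space accommodates tree-like hierarchies.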